Variational Recursive Joint Estimation of Dense Scene Structure and Camera Motion from Monocular Image Sequences

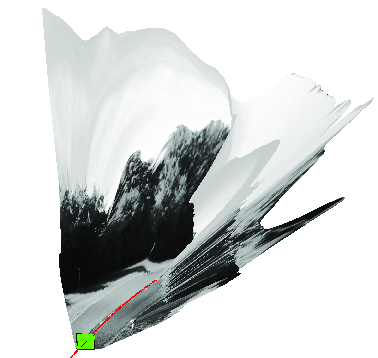

Principal investigators: Florian Becker, Frank Lenzen, Jörg H. Kappes, Christoph Schnörr

We present an approach to jointly estimating camera motion and the dense structure of a static scene, in terms of depth maps, from monocular image sequences in driver-assistance scenarios. At each time instant, only two consecutive frames are processed as input to a joint estimator that fully exploits second-order information of the corresponding optimization problem and effectively copes with the non-convexity caused by both the imaging geometry and the manifold of motion parameters. Additionally, carefully designed Gaussian approximations enable probabilistic inference for the high-dimensional depth map, based on locally varying confidence and on globally varying sensitivity due to the epipolar geometry. Embedding the resulting joint estimator in an online recursive framework achieves a pronounced spatio-temporal filtering effect and robustness.

Becker et al. (2013).
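The recursive idea can be illustrated with a deliberately simplified per-pixel model. This sketch is not the estimator of Becker et al. (2013), which solves a joint variational problem over depth and camera motion. It assumes, hypothetically, that the previous depth map has already been warped into the current frame and that each new two-frame estimate comes with an independent Gaussian variance per pixel. For that variance it assumes pure forward translation by t_z with unit focal length. First-order propagation of the radial flow error then gives sigma_Z ≈ Z² sigma_flow / (t_z r), where r is the image distance from the epipole. Under this model, depth is poorly constrained near the epipole and at large distances. Fusion is an inverse-variance (Kalman-style) update. All function and parameter names are illustrative.

```python
import numpy as np


def forward_motion_depth_var(depth, radius, t_z, sigma_flow):
    """First-order depth variance for pure forward translation (simplified model).

    Assumes unit focal length, the epipole at the image origin, and radial flow
    measured with standard deviation sigma_flow. From Z = t_z * r / dr it follows
    that dZ/d(dr) = -Z**2 / (t_z * r), so the variance diverges as r -> 0.
    """
    radius = np.maximum(radius, 1e-9)  # avoid division by zero exactly at the epipole
    return (depth**2 / (t_z * radius)) ** 2 * sigma_flow**2


def fuse_depth(prior_mean, prior_var, meas_mean, meas_var, process_var=0.0):
    """Per-pixel Gaussian fusion of a pre-warped prior depth map and a new estimate.

    process_var is a hypothetical drift term that inflates the prior before fusion.
    """
    prior_var = prior_var + process_var            # prediction step
    gain = prior_var / (prior_var + meas_var)      # per-pixel Kalman gain in [0, 1]
    mean = prior_mean + gain * (meas_mean - prior_mean)
    var = (1.0 - gain) * prior_var                 # = 1 / (1/prior_var + 1/meas_var)
    return mean, var


if __name__ == "__main__":
    # Two pixels at the same true depth: one far from the epipole, one close to it.
    radius = np.array([0.2, 0.002])
    true_depth = 20.0
    rng = np.random.default_rng(0)
    mean = np.full(2, 15.0)   # poor initial guess
    var = np.full(2, 100.0)   # large initial uncertainty
    meas_var = forward_motion_depth_var(true_depth, radius, t_z=1.0, sigma_flow=1e-3)
    for _ in range(10):
        meas = true_depth + rng.normal(0.0, np.sqrt(meas_var))
        mean, var = fuse_depth(mean, var, meas, meas_var)
    print(mean, var)
```

In this toy setting, the pixel far from the epipole converges quickly, while the pixel near the epipole hardly moves from its prior. This mirrors, in a very reduced form, the locally and globally varying confidence described above.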

Input Image Sequences and HCI Benchmark Database

Most image sequences used in Becker et al. (2013) and Becker et al. (2011) are available for download here. They are part of a database that aims to provide a benchmark for computer vision algorithms in automotive applications. Details on the acquisition of the image sequences are documented in Meister et al. (2012).

Results and Supplemental Material

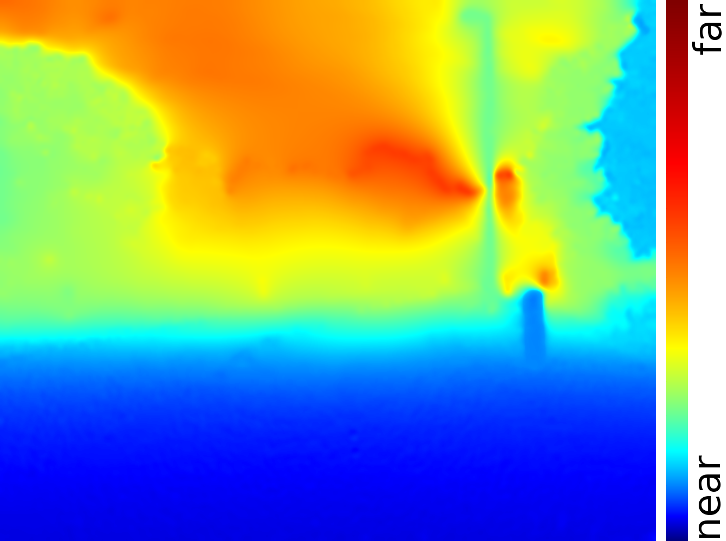

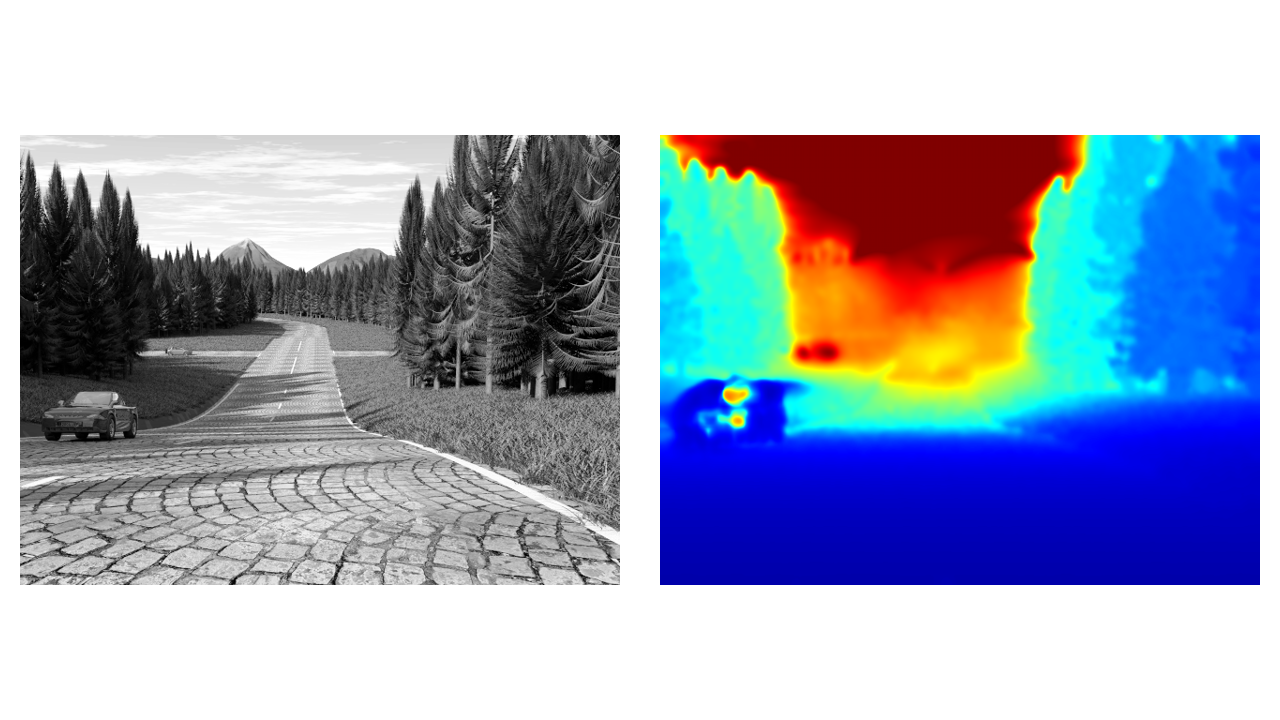

The proposed method was applied to six real image sequences and one synthetic image sequence. In most sequences, only minor motions (pedestrians, trees) occur, so the static-scene assumption approximately holds. This assumption is violated in the Junction and enpeda sequences by moving objects (cars). The resulting distortions in the depth map are, however, locally and temporally restricted to the occurrence of the non-static elements.

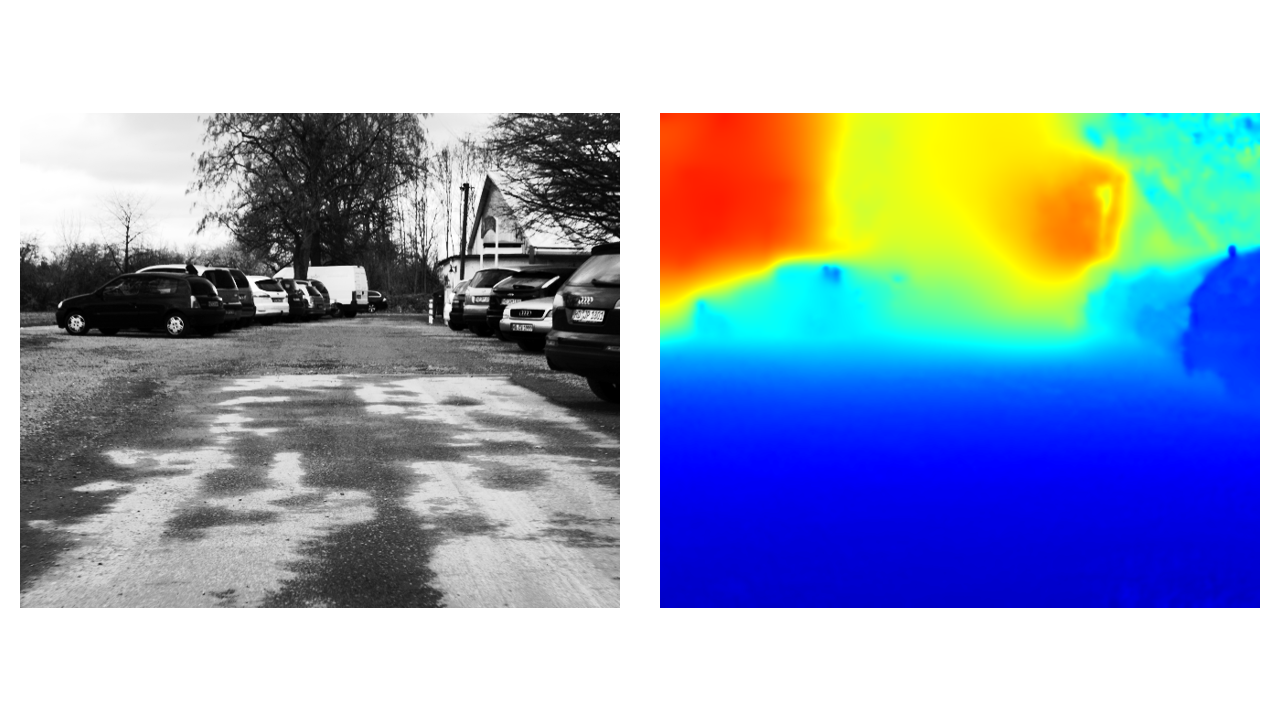

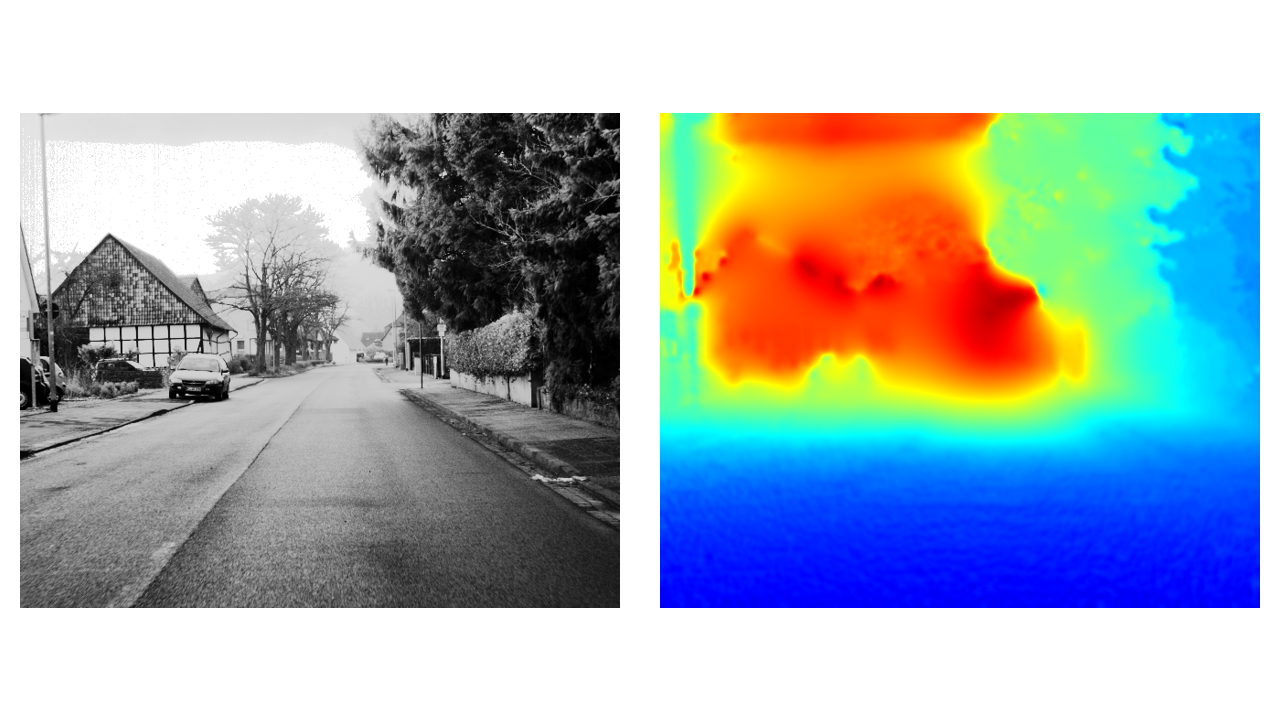

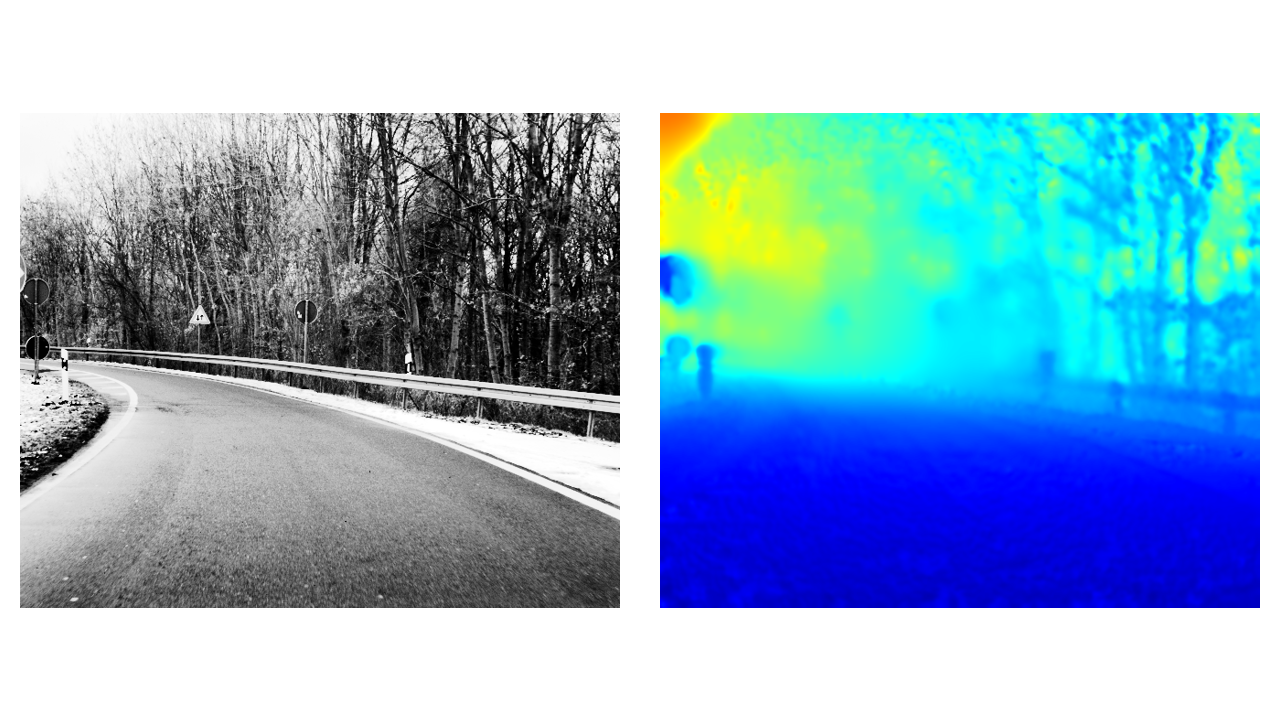

Visualization details: all movies were encoded using ffmpeg on Linux with the mpeg4 codec, codec flag divx and MPG output format, and therefore contain compression artifacts. The gray values of the input images were reduced to 8-bit depth, and histogram equalization was applied to increase the contrast for better visualization. Depth maps are encoded using a non-linear color map. Since the global scale of the scene cannot be reconstructed from a monocular sequence, the depth maps are unique only up to a scale factor, which is approximately constant within each sequence.
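The contrast enhancement and the scale ambiguity mentioned above can be sketched as follows. The exact 8-bit reduction used for the movies is not stated, so the min-max rescaling in `to_uint8` is an assumption. `equalize_hist` is the textbook cumulative-histogram variant of histogram equalization. `align_scale` is a hypothetical helper, not part of the described method: it estimates the unknown global factor by least squares, e.g. to compare a monocular depth map against a reference depth map.

```python
import numpy as np


def to_uint8(img):
    """Linearly rescale gray values of any bit depth to 0..255 (assumed min-max scheme)."""
    img = img.astype(np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:
        return np.zeros(img.shape, dtype=np.uint8)
    return np.round((img - lo) / (hi - lo) * 255).astype(np.uint8)


def equalize_hist(img_u8):
    """Classic histogram equalization of an 8-bit image via its cumulative histogram."""
    hist = np.bincount(img_u8.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[np.nonzero(hist)[0][0]]   # count of the darkest occurring gray value
    n = img_u8.size
    if n == cdf_min:                        # constant image: nothing to stretch
        return img_u8.copy()
    lut = np.round((cdf - cdf_min) / (n - cdf_min) * 255)
    return np.clip(lut, 0, 255).astype(np.uint8)[img_u8]


def align_scale(depth, reference):
    """Least-squares factor s minimizing ||s * depth - reference||^2 over valid pixels."""
    valid = np.isfinite(depth) & np.isfinite(reference) & (depth > 0)
    d, r = depth[valid], reference[valid]
    return float(np.dot(d, r) / np.dot(d, d))
```

A median of per-pixel ratios `reference / depth` is a common alternative to the least-squares factor. It is more robust to outliers, such as the local distortions caused by moving objects.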

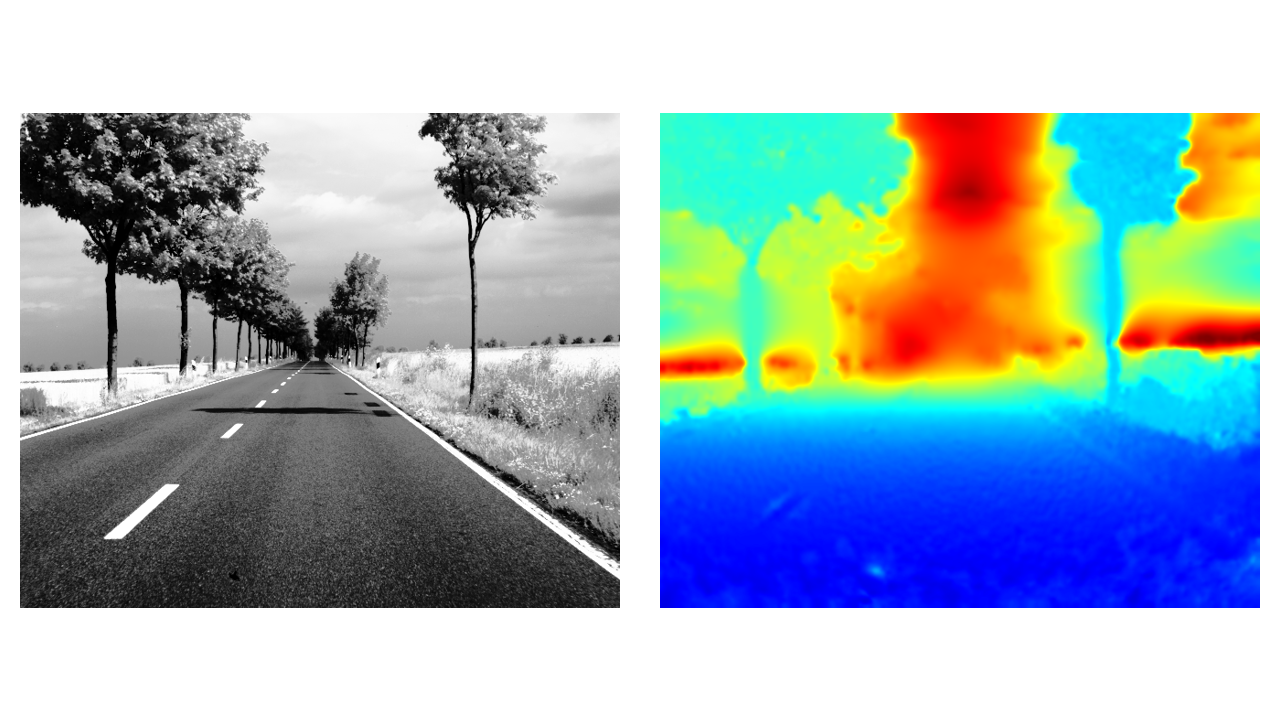

Avenue sequence: This sequence shows a ride at about 100 km/h along a straight avenue lined with trees.

- movie: input sequence and computed depth map [MPG]

- original data: download from HCI Benchmark Database

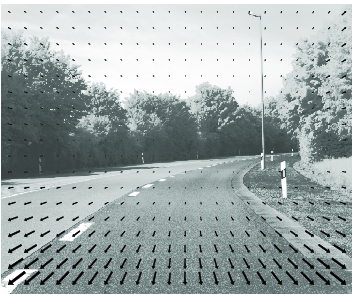

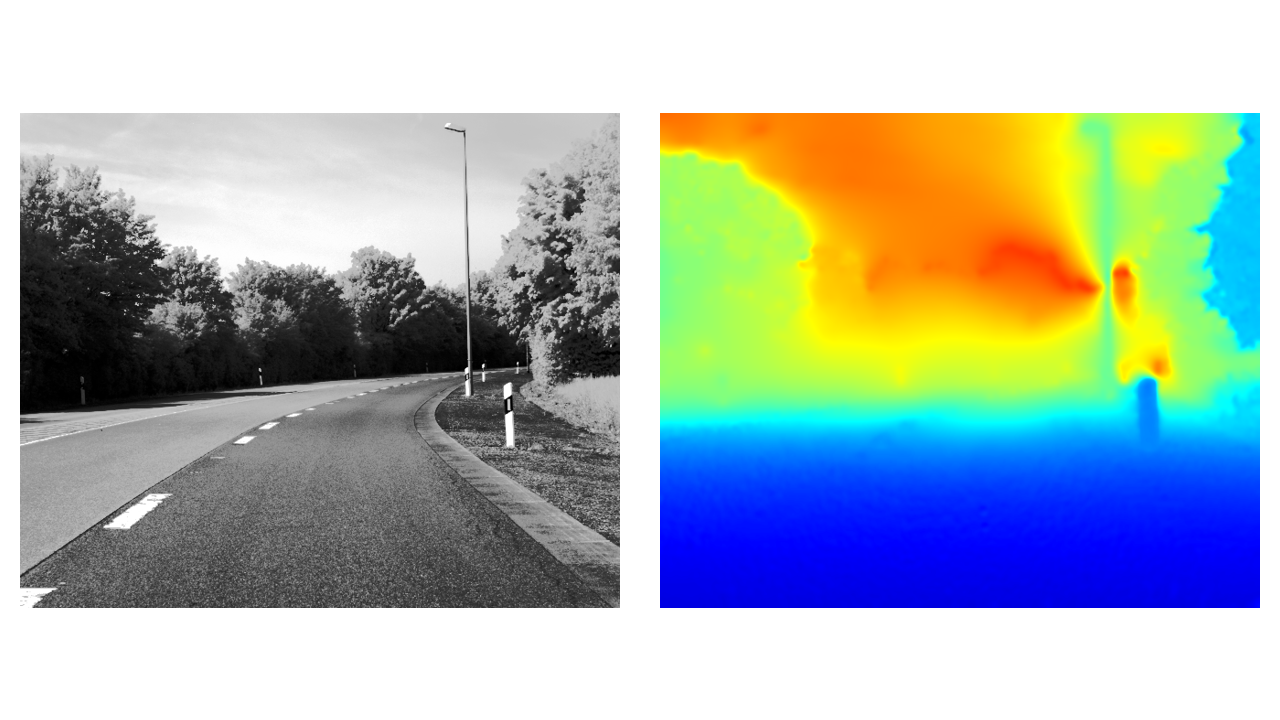

Bend sequence: This sequence shows a ride along a bend at about 70 km/h.

- movie: input sequence and computed depth map [MPG]

- original data: download from HCI Benchmark Database

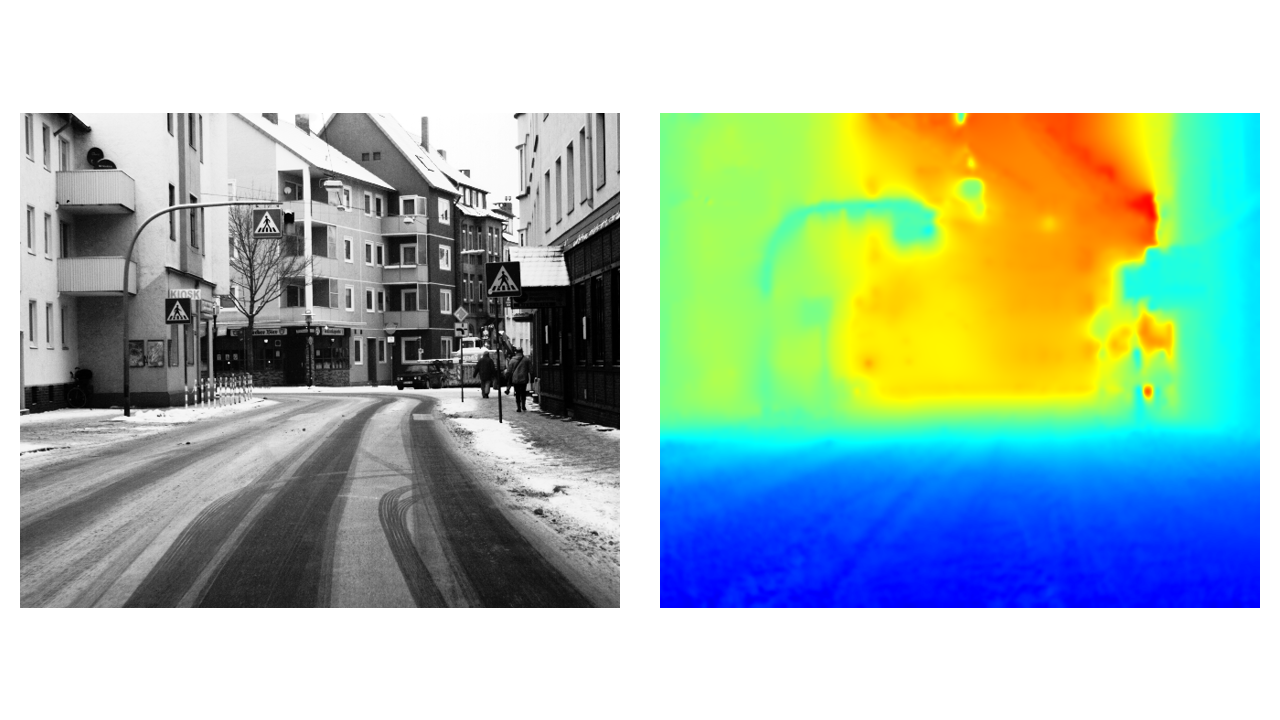

City sequence: This sequence describes a ride through a complex city-center scene at about 40 km/h.

- movie: input sequence and computed depth map [MPG]

- original data: download from HCI Benchmark Database

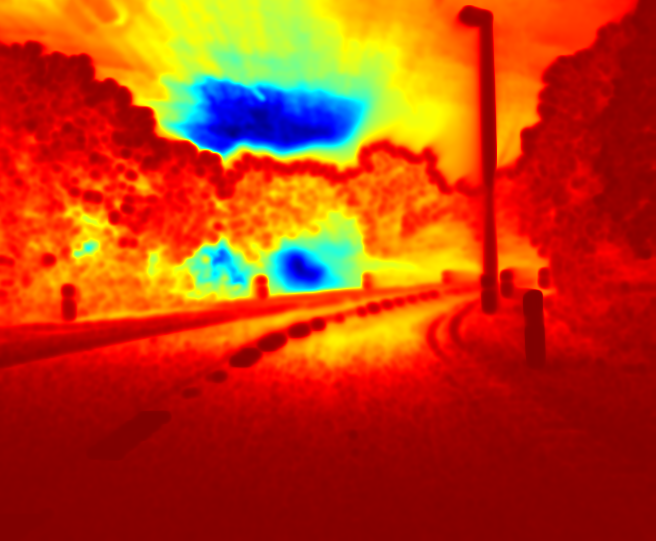

Parking sequence: This sequence shows a slow and bumpy ride through a parking lot, which makes it very challenging.

- movie: input sequence and computed depth map [MPG]

- original data: download from HCI Benchmark Database

Village sequence: This sequence shows a ride through a small town at about 50 km/h.

- movie: input sequence and computed depth map [MPG]

- original data: download from HCI Benchmark Database

Junction sequence: This sequence contains a challenging 90-degree turn. Moving objects (cars) significantly violate the assumption of a static scene.

- movie: input sequence and computed depth map [MPG]

enpeda sequence: This synthetic sequence contains moving objects (cars) that significantly violate the assumption of a static scene.

- movie: input sequence and computed depth map [MPG]

- original data: sequence 2 of set 2 of the enpeda database (University of Auckland)

Acknowledgments

The research presented here was conducted at the Heidelberg Collaboratory for Image Processing (HCI). HCI is supported by the DFG, Heidelberg University and industrial partners. The authors thank Dr. W. Niehsen, Robert Bosch GmbH.

Publications

Variational Recursive Joint Estimation of Dense Scene Structure and Camera Motion from Monocular High Speed Traffic Sequences

Florian Becker, Frank Lenzen, Jörg H. Kappes and Christoph Schnörr

In International Journal of Computer Vision, 105:269-297, 2013. Springer.

[PDF (preprint)] [PDF] [supplemental material] [overview] [BIB (bibtex)]

Variational Recursive Joint Estimation of Dense Scene Structure and Camera Motion from Monocular High Speed Traffic Sequences

Florian Becker, Frank Lenzen, Jörg H. Kappes and Christoph Schnörr

In Proceedings of the 2011 International Conference on Computer Vision, pages 1692-1699, 2011. IEEE Computer Society.

[PDF (preprint)] [PDF] [supplemental material] [overview] [BIB (bibtex)]

This material is presented to ensure timely dissemination of scholarly and technical work. Copyright and all rights therein are retained by authors or by other copyright holders. All persons copying this information are expected to adhere to the terms and constraints invoked by each author's copyright. In most cases, these works may not be reposted without the explicit permission of the copyright holder.

References

An Outdoor Stereo Camera System for the Generation of Real-World Benchmark Datasets with Ground Truth

S. Meister, D. Kondermann and B. Jähne

In SPIE Optical Engineering, 51(2), 2012.

[BIB (bibtex)]